Late-night editing sessions often test the patience and stamina of any creative professional. As exciting as new AI-powered tools like Runway ML can be, their quirks sometimes introduce unexpected obstacles that threaten entire projects. In my case, the dreaded export_task_failed error during the rendering of Gen-2 clips in Runway ML nearly derailed a week’s worth of visual storytelling—until a clever segment stitching workaround not only salvaged my timeline but also streamlined my workflow.

TLDR

While using Runway ML to render Gen-2 clips for a short film, I repeatedly hit the export_task_failed error, which halted progress. The failure occurred during the export phase and seemed linked to project complexity or server-side hiccups. After hours of trial and error, exporting each segment separately and stitching the segments together manually, outside of Runway’s ecosystem, gave me a reliable workaround that preserved the fidelity and order of my timeline. This method proved essential and may benefit others facing similar rendering failures.

The Problem: Gen-2 Export Task Failures

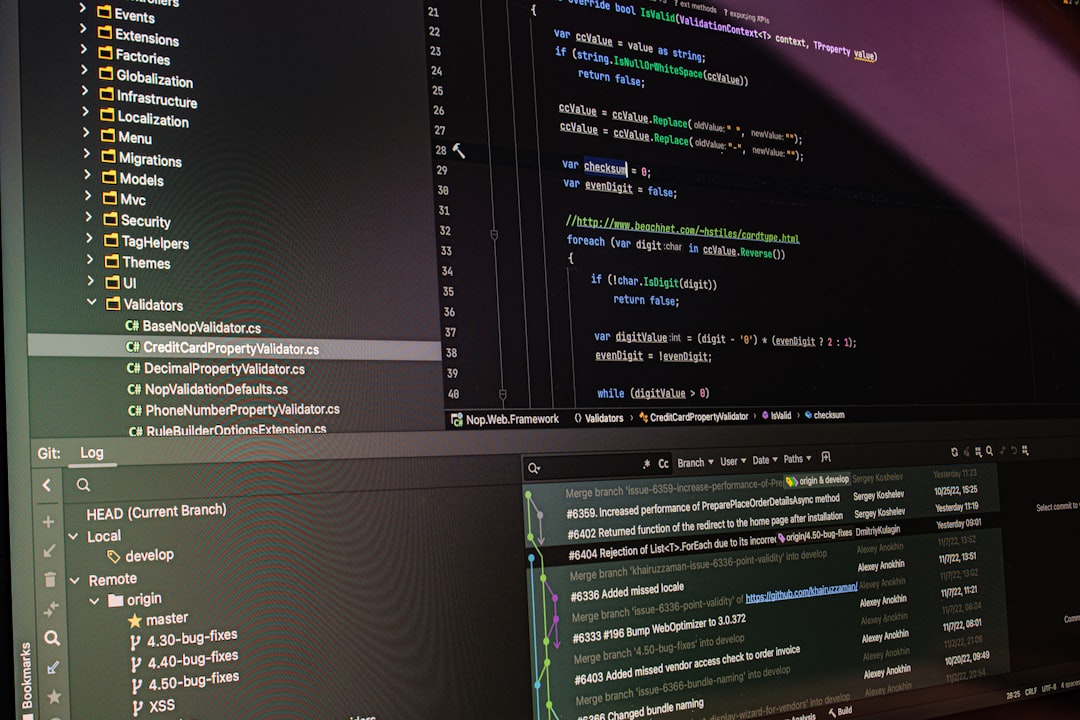

Runway ML has gained traction for providing intuitive, AI-powered video generation, especially with its Gen-2 model offering transformative text-to-video capabilities. While it empowers creators of all levels, it’s not without flaws. When I attempted to render multiple AI-generated clips into a cohesive narrative, the software repeatedly issued the following:

Status: export_task_failed

Description: There was a problem rendering your video. Please try again later.

This cryptic message left little to act on. Restarting the browser session, flushing the cache, minimizing timeline effects—nothing yielded consistent success. The task would fail unpredictably: sometimes minutes into the export, other times after full rendering seemed complete.

Initial Diagnostics: What Went Wrong?

Hoping for a quick resolution, I took a methodical approach:

- File Size: I verified clip size and resolution. Each Gen-2 clip was under 15 seconds, and output was capped at 1080p.

- Browser Conflicts: Tested across Chrome, Firefox, and Edge; cleared cookies and ran sessions in incognito mode.

- Account Issues: Downgraded to SD rendering and re-checked my subscription tier and storage allotments.

- Support Ticket: Contacted Runway ML’s support with detailed logs and timestamps. Initial replies acknowledged general instability but lacked concrete solutions.

The takeaway? The error had no single trigger. Many users on forums and Discord channels reported similar behaviors, pointing to a likely back-end server timeout or queue prioritization bug. Workarounds shared by others included rendering in chunks, using shorter prompt text, and simplifying video transitions—with mixed results.

‘.render()’ Is Not Enough: Why Segments Matter

The core of the problem became clear: Runway ML’s Gen-2 engine could generate short, individual sequences effectively. The breakdown occurred when trying to export a full-project timeline with multiple AI-generated clips, transitions, and audio overlays. My working theory, which I could not confirm from the outside, is that a server-side job (picture something chained under ‘.render()’) ran past an execution time limit and was reported back as an export failure.

So rather than fight this architecture, I adapted to it.

The Solution: Manual Segment Stitching

Enter segment stitching—a technique borrowed from the film-editing world, where individual shots are rendered as standalone clips and later compiled externally. Here’s the exact method that saved my project:

Step 1: Break Down Your Timeline

Instead of rendering the entire video in one go, I separated my timeline into 5-10 second segments, each corresponding to a Gen-2 clip. This reduced the load on each export and gave me control over rendering each part independently.
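I did this split by hand in the timeline, but the bookkeeping is easy to sketch. The snippet below is a minimal illustration, not anything Runway provides: the `Segment` class and `plan_segments` function are names I made up, and the 10-second cap is simply the upper end of the range I used. It turns a list of clip durations into export segments and cuts any clip that runs longer than the cap.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    index: int    # position in the final stitched order
    start: float  # seconds from the start of the timeline
    end: float    # seconds from the start of the timeline


def plan_segments(clip_durations, max_len=10.0):
    """Map per-clip durations to export segments of at most max_len seconds.

    Hypothetical helper: one segment per clip, except that a clip longer
    than max_len is cut into several consecutive segments.
    """
    if max_len <= 0:
        raise ValueError("max_len must be positive")
    segments = []
    cursor = 0.0
    for duration in clip_durations:
        clip_end = cursor + duration
        # Keep cutting until the current clip is fully covered.
        while clip_end - cursor > 1e-9:
            end = min(cursor + max_len, clip_end)
            segments.append(Segment(len(segments), cursor, end))
            cursor = end
        cursor = clip_end
    return segments


if __name__ == "__main__":
    for seg in plan_segments([4.0, 12.0, 8.0]):
        print(f"segment {seg.index:03d}: {seg.start:.1f}s to {seg.end:.1f}s")
```

Keeping the index with each segment matters later: it is what lets you put the exported files back in storyboard order without guessing.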

Step 2: Render Individually in Runway ML

By queuing clips independently for export, most of them rendered successfully. Even when a few exports failed, re-trying only that segment was far less risky and time-consuming than re-rendering the full timeline.
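The retry pattern itself is generic, so here is a rough sketch of it. To be clear about the assumptions: I triggered exports manually in Runway's web interface, and `export_fn` below is a hypothetical stand-in for whatever starts one segment's export and raises when it fails. Nothing here calls a real Runway API. The point is only that a failure costs one segment's retry, not the whole timeline.

```python
import time


def export_with_retries(segment_ids, export_fn, max_attempts=3,
                        base_delay=2.0, sleep=time.sleep):
    """Export each segment on its own and retry only the ones that fail.

    export_fn is a hypothetical callable: it receives a segment id and
    raises an exception if that export fails.
    Returns a (succeeded, failed) pair of segment id lists.
    """
    succeeded, failed = [], []
    for seg_id in segment_ids:
        for attempt in range(1, max_attempts + 1):
            try:
                export_fn(seg_id)
            except Exception:
                if attempt == max_attempts:
                    # Out of attempts: record it and move on to the next segment.
                    failed.append(seg_id)
                else:
                    # Wait longer after each failure: base, 2x base, 4x base, ...
                    sleep(base_delay * 2 ** (attempt - 1))
            else:
                succeeded.append(seg_id)
                break
    return succeeded, failed
```

The growing delay between attempts is my own choice, on the guess that the failures were load-related on the server side; a fixed pause would work just as well for a manual workflow.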

Step 3: Stitch Using External Software

I used DaVinci Resolve, though any professional NLE would suffice (e.g., Adobe Premiere, Final Cut Pro). The process included:

- Importing all successfully rendered Gen-2 clips.

- Aligning them in sequence based on my storyboard.

- Re-inserting non-AI overlays (audio, text, transitions, etc.).

- Rendering the final project in one unified export.

By using Runway ML only to generate and export individual clips, I moved the heavy final render to software built for it, and the final export completed without a single failure.
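If you only need the clips joined end to end, with no overlays or transitions, a command-line alternative to an NLE is ffmpeg's concat demuxer. This is not what I used (I did the stitch in DaVinci Resolve), so treat the following as a sketch under assumptions: the file names are placeholders, and the helper functions are my own. It builds the list file that ffmpeg expects and the matching command.

```python
def build_concat_list(clip_paths):
    """Return the text of an ffmpeg concat list, one `file` line per clip."""
    lines = []
    for path in clip_paths:
        # Inside single quotes, a literal quote is written as '\''.
        escaped = str(path).replace("'", "'\\''")
        lines.append(f"file '{escaped}'")
    return "\n".join(lines) + "\n"


def build_ffmpeg_command(list_file, output_file):
    """Build the argument list for a stream-copy concat (no re-encode)."""
    return [
        "ffmpeg",
        "-f", "concat",   # read the list with the concat demuxer
        "-safe", "0",     # allow paths outside the list file's directory
        "-i", str(list_file),
        "-c", "copy",     # copy streams as-is instead of re-encoding
        str(output_file),
    ]


if __name__ == "__main__":
    # Placeholder names; list the clips in storyboard order.
    clips = ["seg_000.mp4", "seg_001.mp4", "seg_002.mp4"]
    print(build_concat_list(clips), end="")
    print(" ".join(build_ffmpeg_command("clips.txt", "final.mp4")))
```

Write the list text to a file such as clips.txt, then run the printed command. Stream copy only works cleanly when every clip shares the same codec, resolution, and frame rate; if they differ, or if you need audio beds, titles, or transitions, an NLE is still the better tool.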

The Impact: Time Saved, Deadlines Met

Rendering blind is every editor’s worst nightmare. With client delivery approaching, this fix wasn’t just a learning experience—it avoided a serious delay. What would’ve spiraled into a 72-hour do-over turned into an efficient, transparent routine. Future projects now follow this approach from the start, avoiding export bottlenecks entirely.

Why This Could Be a Feature (Not a Flaw)

Interestingly, this “problem” highlighted a broader architectural tension in cloud-based AI rendering platforms: convenience versus control. Gen-2 hides most of its complexity from the user; you don’t get access to raw files, codecs, or frame-level control until late in the pipeline. That works well for generating a single clip, but it leaves few options when a long export fails. Modularizing exports into manageable pieces makes the platform far more robust in real-world use.

There’s a lesson here for Runway ML’s roadmap:

- Official Segment Rendering Mode: Let users flag segments for auto-export and download.

- Export Health Diagnostic: Indicate export readiness based on timeline complexity.

- Fail-Safe Requeue System: Automatically retry failed segments under smarter constraints.

Until then, treating Runway as a generation tool—rather than an end-to-end suite—may be the wisest approach.

Final Thoughts

Although frustrating at the start, the export_task_failed error nudged me toward a scalable, modular post-production strategy that continues to pay dividends. It’s a strong reminder that relying too deeply on one tool—without understanding its limitations—can bottleneck even the best workflows.

If you’re working with AI-generated video content, especially in high-pressure scenarios, consider adopting a segment-first mindset from the outset. In my experience, exports succeed more reliably, and you keep finer control over each shot because every segment can be re-rendered, replaced, or re-ordered on its own.

Stay flexible. Work smart. And always have a backup export plan.