Stable Diffusion has become one of the most groundbreaking advancements in the field of AI-generated art. With its open-source architecture and community support, tools like the Stable Diffusion WebUI make high-quality image generation accessible to anyone with a decent GPU. However, many users encounter technical issues—one of the most persistent being CUDA “out of memory” crashes during rendering, especially when attempting full-resolution 1024×1024 generations.

TL;DR

Stable Diffusion WebUI often crashes with CUDA out of memory errors when rendering high-resolution images like 1024×1024. This issue typically stems from inadequate GPU VRAM and suboptimal memory management. Fortunately, a few tweaks involving VRAM optimization flags, like --medvram and --lowvram, can greatly stabilize performance. Implementing these flags has allowed users to generate full-res images without crashing, even on GPUs with less memory.

The Crash: A Frustrating Roadblock

For many users exploring AI image generation, CUDA out of memory errors are the first technical mountain to climb. These errors often occur when pushing the resolution or complexity of an image, such as generating a 1024×1024 image with a base Stable Diffusion model on a GPU with 8GB of VRAM or less.

The symptoms are well-known:

- WebUI suddenly freezes or crashes during generation

- Terminal shows “RuntimeError: CUDA out of memory”

- The system may slow down or require a restart to recover

This issue is not a bug in the model or the rendering engine; it is a plain shortage of memory. High-res generations require larger latent tensors and much larger intermediate activations in the U-Net's convolution and attention layers. If the GPU cannot fit a requested allocation, PyTorch raises the out of memory error and the generation aborts.
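One way to confirm that memory is the limit is to ask PyTorch how much VRAM is free before starting a run. The sketch below assumes PyTorch is installed in the same Python environment as the WebUI; `to_gib` and `free_vram_gib` are illustrative names, not part of the WebUI.

```python
def to_gib(num_bytes):
    """Convert a byte count to GiB, rounded to two decimals."""
    return round(num_bytes / 1024**3, 2)


def free_vram_gib(device=0):
    """Return (free, total) VRAM in GiB, or None without PyTorch or CUDA."""
    try:
        import torch  # imported lazily so the helper degrades gracefully
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    free_bytes, total_bytes = torch.cuda.mem_get_info(device)
    return to_gib(free_bytes), to_gib(total_bytes)


if __name__ == "__main__":
    report = free_vram_gib()
    if report is None:
        print("No CUDA device visible to PyTorch")
    else:
        print(f"free {report[0]} GiB of {report[1]} GiB")
```

If the free figure is already low before generation starts, other applications using the GPU are likely part of the problem.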

Understanding What Consumes VRAM

To solve this problem effectively, it’s important to first understand what uses VRAM during an image generation cycle:

- Model Weights: The Stable Diffusion model, including its U-Net and VAE, consumes gigabytes of memory even before generation starts.

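- Latent Representations: Stable Diffusion works in a latent space downsampled 8x per side (64x fewer spatial positions than the pixel image), which is far more memory efficient than direct pixel processing. At 1024×1024 the latent is 128×128, four times the positions of a 512×512 generation, and the activations computed from it still become taxing.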

- Sampling Algorithm: Samplers like DDIM, Euler, and LMS add further memory usage due to intermediate states and noise maps.

- Optional Features: Features like attention maps, image previews, safety checkers, and multiple batch generations increase the overhead drastically.

When all of this adds up, especially at larger resolutions or larger batch sizes, the result is often a crash unless your GPU has 16GB of VRAM or more.
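A rough calculation shows why resolution matters so much. The sketch below assumes a Stable Diffusion 1.x style setup: 4 latent channels, 8x downsampling per side, 8 attention heads, fp16 values, and a self-attention layer at full latent resolution whose score matrix is fully materialized. These are illustrative assumptions, and optimized attention implementations avoid building the whole matrix at once.

```python
def latent_shape(width, height, channels=4, downsample=8):
    """Shape of the latent tensor (channels, rows, cols) for one image."""
    return (channels, height // downsample, width // downsample)


def naive_attention_bytes(width, height, heads=8, bytes_per_value=2,
                          downsample=8):
    """Bytes for one fully materialized self-attention score matrix."""
    tokens = (width // downsample) * (height // downsample)
    # Each head stores a tokens x tokens matrix of scores.
    return heads * tokens * tokens * bytes_per_value


for side in (512, 1024):
    mib = naive_attention_bytes(side, side) / 1024**2
    print(side, latent_shape(side, side), f"{mib:.0f} MiB")
# 512 (4, 64, 64) 256 MiB
# 1024 (4, 128, 128) 4096 MiB
```

Doubling the side length quadruples the number of latent positions, and the naive score matrix grows with the square of that, which is a 16x increase under these assumptions.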

Key VRAM Optimization Flags

Fortunately, Stable Diffusion WebUI includes several options designed to optimize memory usage. These are flags you can include when starting the interface from a command line, or configure through a launcher script or configuration file. The most impactful flags include:

--medvram

This medium VRAM mode reduces memory usage by splitting the model into its three main parts (the text encoder, the VAE, and the U-Net) and keeping only the one currently in use in VRAM, with the others moved to CPU memory. It offers better stability for GPUs with 6–8GB VRAM and allows for resolutions up to 1024×1024 with a reduced chance of crashing.

--lowvram

This goes a step further, splitting the U-Net itself into smaller modules and keeping only one of them in VRAM at a time. The trade-off is significantly slower generation, but in return it becomes possible to generate high-res content even on 4GB and 6GB GPUs.
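The effect of this kind of offloading on resident model weights can be sketched with simple arithmetic. The example assumes the model is held as three parts with only one kept in VRAM at a time; the sizes are approximate fp16 figures for a Stable Diffusion 1.x checkpoint and are assumptions for illustration, not measurements.

```python
# Approximate fp16 weight sizes in GB (assumed, for illustration only).
PARTS_GB = {"cond": 0.25, "first_stage": 0.17, "unet": 1.72}


def resident_weights_gb(parts, one_at_a_time):
    """Peak weight memory: the largest part if swapped, else the sum."""
    sizes = list(parts.values())
    return max(sizes) if one_at_a_time else sum(sizes)


print(f"all resident:  {resident_weights_gb(PARTS_GB, False):.2f} GB")
print(f"one at a time: {resident_weights_gb(PARTS_GB, True):.2f} GB")
```

Under these assumptions the U-Net dominates, so swapping whole parts saves relatively little on weights; splitting the U-Net further is what lets the more aggressive mode fit on smaller cards, at the cost of many more transfers between CPU and GPU memory.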

--no-half and --precision full

These force the model to avoid half-precision (fp16) arithmetic, which can help on GPUs that have poor fp16 support or encounter instability. However, note that using full precision actually increases VRAM usage, so this is a solution only in very specific edge cases.
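As a starting point, the choice between these flags can be reduced to a small rule of thumb. The sketch below uses thresholds taken loosely from the ranges mentioned above (they are assumptions, not official guidance), and `suggest_flags` is a hypothetical helper. It assumes the AUTOMATIC1111 distribution of the WebUI, where launch flags are usually set through `COMMANDLINE_ARGS` in `webui-user.sh` or `webui-user.bat`.

```python
def suggest_flags(vram_gb):
    """Pick a VRAM flag from GPU memory size (thresholds are assumptions)."""
    if vram_gb <= 4:
        return ["--lowvram"]
    if vram_gb <= 8:
        return ["--medvram"]
    return []  # larger cards usually need no offloading flag


def commandline_args(flags):
    """Join flags into the string assigned to COMMANDLINE_ARGS."""
    return " ".join(flags)


for vram in (4, 6, 16):
    print(f'{vram} GB -> COMMANDLINE_ARGS="{commandline_args(suggest_flags(vram))}"')
# 4 GB -> COMMANDLINE_ARGS="--lowvram"
# 6 GB -> COMMANDLINE_ARGS="--medvram"
# 16 GB -> COMMANDLINE_ARGS=""
```

A 6GB card sits on the boundary: starting with --medvram and falling back to --lowvram only if crashes persist keeps generation as fast as the hardware allows.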

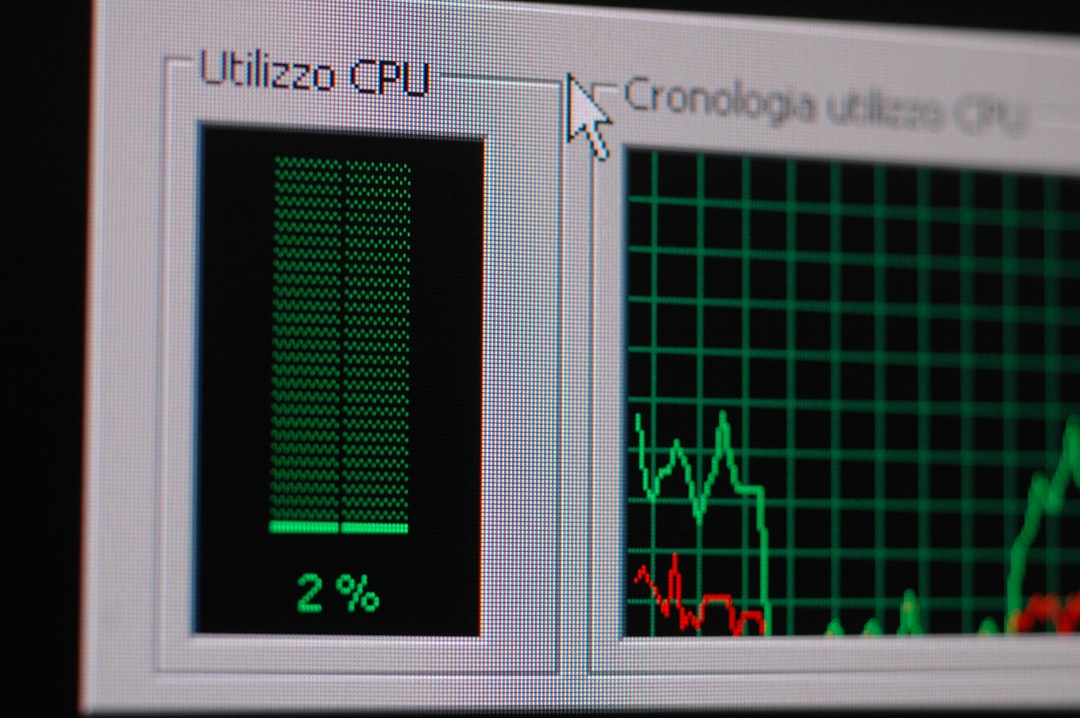

Applying the Flags: Real-world Results

As a test case, we attempted a 1024×1024 generation task using a machine equipped with an NVIDIA RTX 2060 6GB GPU. With no flags, the rendering process often crashed around the 18th to 20th sampling step. By applying --medvram, the system became noticeably more stable, completing full renders consistently up to 30 steps.

Switching to --lowvram allowed for even longer generations, albeit with slower per-step times: nearly doubling from 7 seconds to 13 seconds per step. However, there were no crashes, and even more complex prompts with added features, like Hires Fix, completed reliably.
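To put the slowdown in perspective, the per-step figures above can be turned into total render times. This is plain arithmetic on the numbers reported here, assuming a 30-step run and ignoring model loading and image decoding time.

```python
def render_seconds(steps, seconds_per_step):
    """Total sampling time for one image, ignoring load and decode time."""
    return steps * seconds_per_step


STEPS = 30
for label, per_step in (("before --lowvram", 7), ("with --lowvram", 13)):
    total = render_seconds(STEPS, per_step)
    print(f"{label}: {total} s ({total / 60:.1f} min)")
# before --lowvram: 210 s (3.5 min)
# with --lowvram: 390 s (6.5 min)
```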

Further Optimization Techniques

Beyond flags, there are several other methods users can apply for better memory performance:

- Reducing Batch Size: Always start with a batch size of 1 to guarantee stability.

- Lower Sampling Steps: Try reducing from 50 to 20 or 30 if the resulting image quality is acceptable.

- Disabling Unused Features: Safety checkers, real-time attention maps, and preview renderings often consume VRAM and can be safely disabled for production runs.

- Swap to Efficient Samplers: Euler a or DPM++ are generally reported to be more memory efficient compared to older multistep samplers like LMS.

Adjusting these settings collectively can free up hundreds of megabytes in memory, enough to tip the scale from crashing to completing a render.
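The batch size advice can also be automated as a retry loop that halves the batch whenever an out of memory error appears. This is a minimal sketch: `generate` is a hypothetical callable standing in for whatever produces images, `fake_generate` only simulates a GPU that fits two images at once, and the error check simply matches the "out of memory" text shown in the terminal.

```python
def generate_with_fallback(generate, batch_size):
    """Call generate(batch_size), halving the batch on out-of-memory errors."""
    while True:
        try:
            return batch_size, generate(batch_size)
        except RuntimeError as error:
            out_of_memory = "out of memory" in str(error).lower()
            if not out_of_memory or batch_size == 1:
                raise  # unrelated error, or nothing left to reduce
            batch_size = max(1, batch_size // 2)


def fake_generate(batch_size):
    """Stand-in generator: pretends the GPU fits at most two images."""
    if batch_size > 2:
        raise RuntimeError("CUDA out of memory")
    return ["image"] * batch_size


used, images = generate_with_fallback(fake_generate, batch_size=8)
print(used, len(images))  # 2 2
```

Starting from a batch size of 1 remains the safest default; a loop like this is mainly useful for finding the largest batch a given card tolerates.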

Community Scripts and Extensions

The Stable Diffusion WebUI ecosystem is full of third-party scripts and extensions that can assist with VRAM management. Some notable ones include:

- Memory-saving attention: Replaces default self-attention with a more efficient implementation.

- xFormers: A library that provides memory-efficient attention, using fused kernels for better performance and lower memory usage. In the AUTOMATIC1111 WebUI it is enabled with the --xformers launch flag.

These can be enabled through the WebUI settings or by pip-installing dependencies during setup. Keep in mind that some experimental plugins may cause compatibility issues with specific models, so always test on non-critical prompts first.
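The core idea behind memory-saving attention can be shown in a few lines. The sketch below is a dependency-free illustration and not the implementation used by xFormers or the WebUI: it processes queries in slices, so only a slice of the score matrix exists at any time, and checks that the output matches the unsliced version. Fused GPU kernels go further than this, but the principle is similar.

```python
import math


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attend(queries, keys, values):
    """Naive attention: holds one score row per query, all at once."""
    scale = 1.0 / math.sqrt(len(keys[0]))
    scores = [[scale * sum(a * b for a, b in zip(q, k)) for k in keys]
              for q in queries]
    weights = [softmax(row) for row in scores]
    dims = range(len(values[0]))
    return [[sum(w * v[d] for w, v in zip(row, values)) for d in dims]
            for row in weights]


def attend_sliced(queries, keys, values, slice_size):
    """Same result, but scores exist for only slice_size queries at a time."""
    output = []
    for start in range(0, len(queries), slice_size):
        output.extend(attend(queries[start:start + slice_size], keys, values))
    return output


queries = [[0.1 * (i + j) for j in range(4)] for i in range(6)]
keys = [[0.2 * (i - j) for j in range(4)] for i in range(6)]
values = [[float(i), float(-i)] for i in range(6)]

full = attend(queries, keys, values)
sliced = attend_sliced(queries, keys, values, slice_size=2)
print(all(abs(a - b) < 1e-12 for f, s in zip(full, sliced) for a, b in zip(f, s)))
```

Smaller slices lower peak memory but add more sequential work, which mirrors the speed versus memory trade-off seen with the VRAM flags.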

Conclusion: Full-Res Generations Are Now Within Reach

Stable Diffusion is an incredibly powerful tool, but like any high-performance software, it relies on smart resource management to work effectively. For users facing CUDA out of memory errors—especially during full-resolution image generations—the solution lies not just in better hardware, but in optimizing how memory is used.

With flags like --medvram and --lowvram, even modest GPUs can generate stunning 1024×1024 images without crashing. When paired with sensible batching, efficient samplers, and optional feature disabling, users can push the boundaries of creative AI without upgrading their systems.

As models grow in size and user creativity pushes the envelope, understanding and managing VRAM will remain a key part of working with generative AI tools. But as this article shows, solutions are well within reach.